Rishabh Raj, Data Engineering Lead, Giga Technology Centre

Connecting every school to the internet requires accurate, up-to-date data on school locations and connectivity. For Giga’s mission of helping governments connect every school to the internet by 2030, this data is not just background information: it is the backbone that powers decision-making and resource allocation, and underpins tools and applications like Giga Maps and Giga Meter. But when that data comes from multiple sources, including government ministries, mobile operators, and satellite providers, each with different formats and update cycles, building a reliable picture is far from simple.

At Giga, we have learned that collecting data is only the beginning. The real work lies in operationalizing it: transforming fragmented, inconsistent inputs into a consolidated, trusted view that everyone, from our research team to government stakeholders to public-facing platforms, can rely on.

The reality: Managing data at scale

When Giga launched in 2019, school location and connectivity data arrived through scattered email chains. Government ministries sent spreadsheets in inconsistent formats. Mobile operators shared coverage maps that required standardization. Geolocation data placed schools in town centers instead of at their actual buildings, or sometimes dropped them in the ocean entirely.

Without standardized processes, version control amounted to filenames like ‘schoolmaster_edited’, ‘schoolmaster_edited_v2’, and ‘schoolmaster_final_FINAL’. Determining the authoritative dataset for a country typically involved reviewing email threads and SharePoint folders to identify the latest version.

This was not sustainable. More critically, when two team members found different numbers for the same metric, for example the number of schools in an area, trust in the data was instantly undermined. With Giga mapping over 2.2 million schools, manual data reconciliation would be impossible.

The principle: From many sources, one consolidated view

The solution required rethinking how we handle data from the ground up. Our goal: create a consolidated view that serves product teams, researchers, and government partners equally.

This meant solving several problems simultaneously including:

- standardizing inputs from governments with varying technical capacities and reporting systems.

- validating quality through automated checks that catch errors before they propagate.

- managing conflicts when trusted sources disagree about the same school.

- tracking changes so we can understand how connectivity evolves and report progress accurately.

- serving multiple stakeholders with one consistent dataset that powers internal dashboards, research efforts, and products like Giga Maps and Giga Meter.

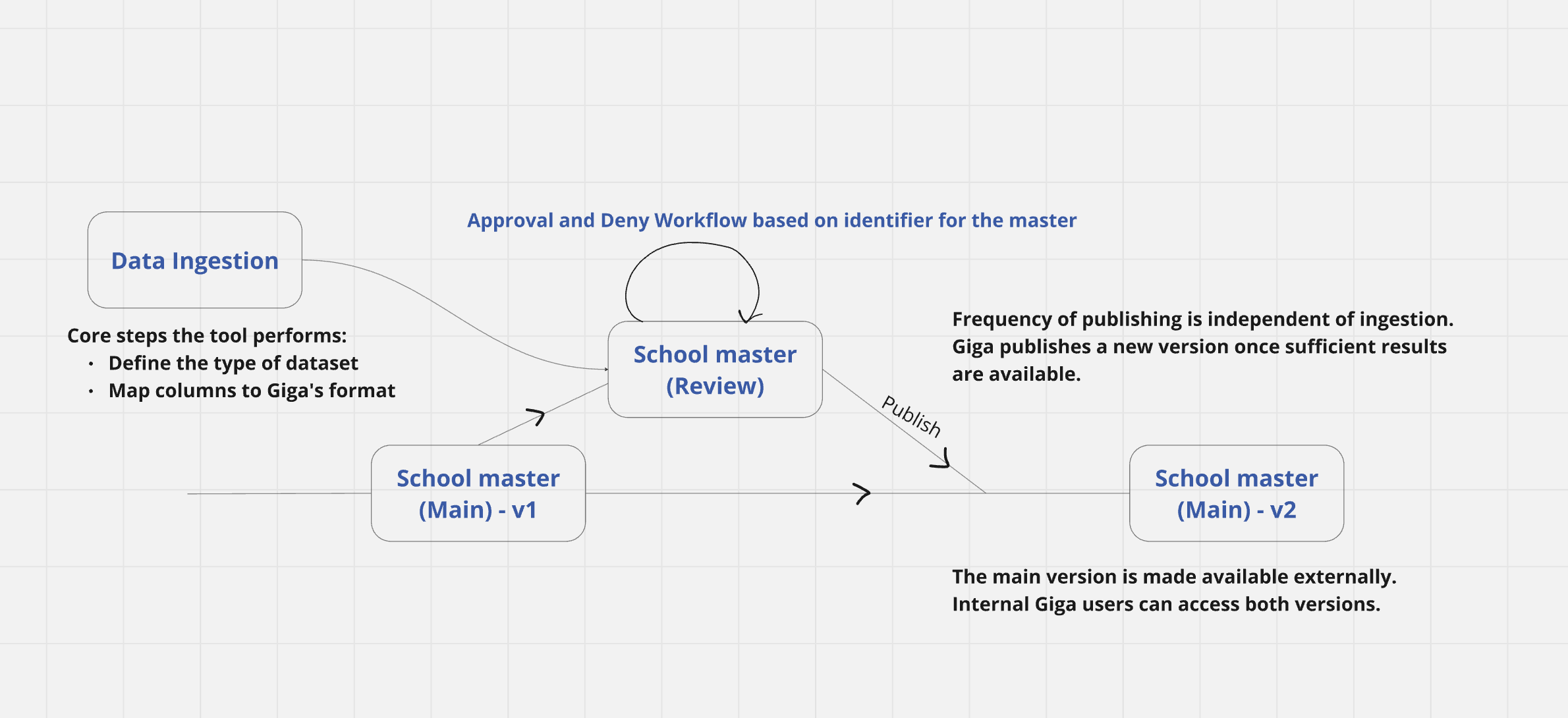

The Lakehouse architecture

The foundation for operationalizing data is a single source of quality-assured, consumption-ready data assets, for which we can also manage metadata and track lineage to enable governance. The same core datasets support reporting through open-source BI tooling connected to a performant query layer, and serve the needs of machine learning pipelines. They are also available for any application, such as Giga Maps and Giga Meter, to consume via APIs.

We organize our data processing into three conceptual layers similar to the medallion architecture, each serving a distinct purpose:

Bronze: The source layer

Every dataset we receive, whether from a government ministry or a mobile network provider, lands first in the bronze layer exactly as we got it. No cleaning, no transformation. This preserves the original data and creates an audit trail.
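As a rough illustration of landing data untouched, the sketch below stores an incoming file byte-for-byte and writes a small metadata record next to it. The function name `land_in_bronze`, the directory layout, and the metadata fields are assumptions made for this example, not a description of Giga’s actual storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def land_in_bronze(raw_bytes: bytes, source: str, filename: str, bronze_root: Path) -> Path:
    """Store an incoming file exactly as received and record where it came from."""
    digest = hashlib.sha256(raw_bytes).hexdigest()

    # Hypothetical layout: <bronze_root>/<source>/<hash prefix>_<original filename>
    target_dir = bronze_root / source
    target_dir.mkdir(parents=True, exist_ok=True)
    target = target_dir / f"{digest[:12]}_{filename}"
    target.write_bytes(raw_bytes)  # no cleaning, no transformation

    # Sidecar metadata acts as the audit trail for this delivery.
    metadata = {
        "source": source,
        "original_filename": filename,
        "sha256": digest,
        "received_at": datetime.now(timezone.utc).isoformat(),
    }
    meta_path = target.with_name(target.name + ".meta.json")
    meta_path.write_text(json.dumps(metadata, indent=2))
    return target
```

Keeping a content hash alongside the raw file makes it possible to show later that a downstream record traces back to an unmodified delivery.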

Silver: The clean layer

Data moves from bronze to silver through automated quality checks. These validate structure, location, and basic logic, for example confirming that a school lies within national boundaries.

Critical failures are automatically excluded, while non-critical issues are flagged for human review. The result is clean, standardized data aligned to Giga’s internal schema and ready for systems to process, though it still requires one more layer of review.
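The split between critical and non-critical failures can be sketched as follows. This is a simplified example under stated assumptions: the field names, the `BOUNDS` rectangle, and the choice of which checks count as critical are all hypothetical, and a real boundary check would test against a country polygon rather than a bounding box.

```python
from dataclasses import dataclass, field


@dataclass
class CheckResult:
    passed: list = field(default_factory=list)    # clean rows
    flagged: list = field(default_factory=list)   # non-critical issues, for human review
    rejected: list = field(default_factory=list)  # critical failures, excluded


# Hypothetical bounding box: (min_lat, max_lat, min_lon, max_lon).
BOUNDS = (-1.5, 4.3, 29.5, 35.1)


def run_checks(rows, bounds=BOUNDS):
    """Sort rows into passed, flagged, and rejected based on simple checks."""
    min_lat, max_lat, min_lon, max_lon = bounds
    result = CheckResult()
    for row in rows:
        lat, lon = row.get("lat"), row.get("lon")
        if lat is None or lon is None:
            # Critical: a school without coordinates cannot be mapped.
            result.rejected.append((row, "missing coordinates"))
        elif not (min_lat <= lat <= max_lat and min_lon <= lon <= max_lon):
            # Critical: the point falls outside the (assumed) national bounds.
            result.rejected.append((row, "outside national bounds"))
        elif not row.get("name"):
            # Non-critical: usable location, but a reviewer should look at it.
            result.flagged.append((row, "missing school name"))
        else:
            result.passed.append(row)
    return result


rows = [
    {"school_id": "A", "name": "North Primary", "lat": 0.3, "lon": 32.6},
    {"school_id": "B", "name": "", "lat": 1.0, "lon": 33.0},
    {"school_id": "C", "name": "Ocean School", "lat": 0.0, "lon": 0.0},
]
result = run_checks(rows)
```

Each rejected or flagged row carries its reason, so the outcome can feed directly into a report for reviewers.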

Gold: The curated master record

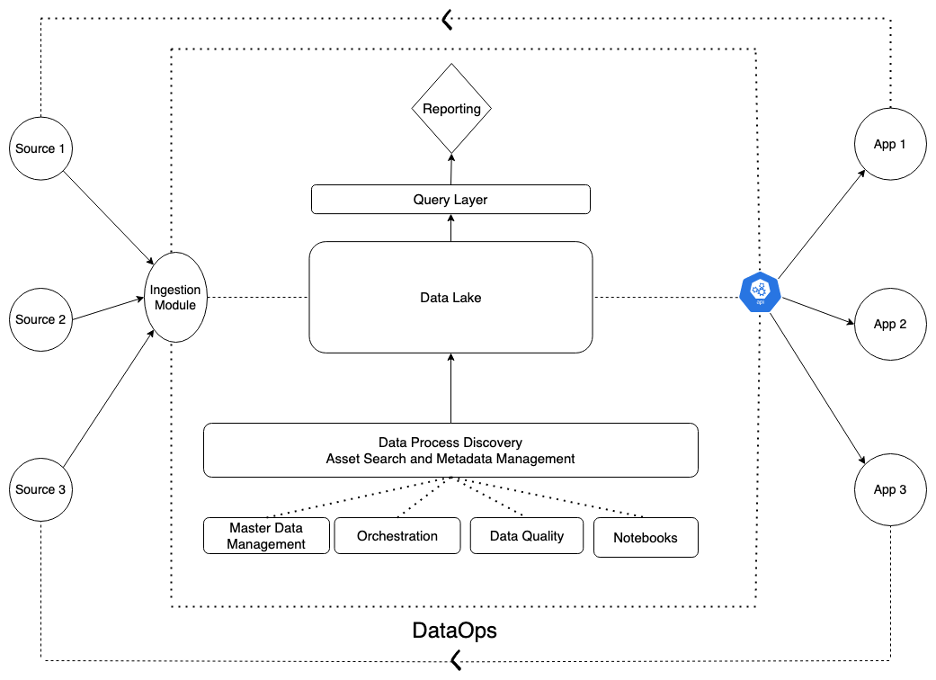

The gold layer represents the master record for each school, the ‘golden record’ compiled from authoritative government sources and partner data. This is where school master data, including location, name, and education level, merges with connectivity data from multiple partners. When conflicts arise, administrators review proposed changes and approve what advances to gold.

This approval process is critical. For example, if government data reports one school count for an area while another source reports a different figure, an administrator reviews both inputs and determines which count should be treated as authoritative. The gold layer captures that decision and every subsequent change as a new version with full lineage.

Giga ensures data integrity and governance through a set of purpose-built tools and processes:

Schema mapping, data quality reports and validation

Governments submit data in their native format. Our internal tool maps their inputs to Giga’s standard schema and runs automated quality checks spanning geolocation accuracy, categorical data consistency, and duplicate detection. The resulting data quality reports give both Giga teams and government partners visibility into exactly what passed, what failed, and why. This guides discussions with governments about which school data can be used and which needs attention, for example: “We can use data from 30 of your 100 schools immediately; here is why the other 70 need further attention.”
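To make the mapping and reporting steps concrete, here is a minimal sketch. The column names in `COLUMN_MAP`, the target field names, and the two checks shown are assumptions chosen for illustration; they are not Giga’s actual schema or check suite.

```python
from collections import Counter

# Hypothetical mapping from one government's column names to a standard schema.
COLUMN_MAP = {
    "SchoolCode": "school_id",
    "Name of School": "school_name",
    "Lat": "latitude",
    "Long": "longitude",
}


def map_to_schema(record, column_map=COLUMN_MAP):
    """Rename known columns and drop any column not in the mapping."""
    return {column_map[k]: v for k, v in record.items() if k in column_map}


def quality_report(records):
    """Split mapped records into usable ones and ones needing attention, with reasons."""
    id_counts = Counter(r.get("school_id") for r in records)
    usable, needs_attention = [], []
    for r in records:
        issues = []
        if id_counts[r.get("school_id")] > 1:
            issues.append("duplicate school_id")
        if r.get("latitude") is None or r.get("longitude") is None:
            issues.append("missing coordinates")
        if issues:
            needs_attention.append({"record": r, "issues": issues})
        else:
            usable.append(r)
    return {"usable": usable, "needs_attention": needs_attention}


raw = [
    {"SchoolCode": "S1", "Name of School": "A", "Lat": 1.0, "Long": 2.0},
    {"SchoolCode": "S2", "Name of School": "B", "Lat": None, "Long": 2.0},
]
report = quality_report([map_to_schema(r) for r in raw])
print(len(report["usable"]), "usable;", len(report["needs_attention"]), "need attention")
```

A report shaped like this supports the kind of conversation described above: how many records are usable now, and the specific reason each remaining record was held back.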

Master data management

When updates flow from silver to gold, administrators review proposed changes before they are applied. This human-in-the-lead approach prevents bad data from cascading through our systems while maintaining an approval audit trail.
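One way to picture this review step is as a field-level diff that an administrator accepts or rejects. The sketch below is illustrative only: `propose_changes`, `apply_approved`, and the audit log structure are hypothetical names and shapes, not Giga’s internal tooling.

```python
def propose_changes(gold_record, silver_record):
    """Return field-level differences as {field: (current_value, proposed_value)}."""
    return {
        key: (gold_record.get(key), value)
        for key, value in silver_record.items()
        if gold_record.get(key) != value
    }


def apply_approved(gold_record, proposed, approved_fields, reviewer, audit_log):
    """Apply only the approved changes and log every decision, approved or not."""
    updated = dict(gold_record)  # leave the existing gold record untouched
    for field_name, (old, new) in proposed.items():
        decision = "approved" if field_name in approved_fields else "rejected"
        if decision == "approved":
            updated[field_name] = new
        audit_log.append({
            "field": field_name,
            "old": old,
            "new": new,
            "decision": decision,
            "reviewer": reviewer,
        })
    return updated


gold = {"school_id": "S1", "name": "North Primary", "connectivity": "none"}
silver = {"school_id": "S1", "name": "Nrth Primary", "connectivity": "4G"}

audit_log = []
proposed = propose_changes(gold, silver)
# The reviewer accepts the connectivity update but rejects the misspelled name.
new_gold = apply_approved(gold, proposed, {"connectivity"}, "admin@example.org", audit_log)
```

Logging rejected proposals as well as approved ones keeps the audit trail complete: it records not only what changed, but what was deliberately kept out.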

Versioning

Every change creates a new version. At any point, we can trace how a school’s record has evolved: when its connectivity status changed, who updated its location, which government dataset introduced new enrollment data. This versioning is foundational to DataOps principles: automation, monitoring, and the ability to roll back if needed.
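The idea of an append-only history with rollback can be sketched in a few lines. This is a toy in-memory model under stated assumptions: the `VersionedRecord` class and its fields are invented for the example, and a production lakehouse would typically rely on the versioning features of its table format instead.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Version:
    number: int
    data: dict
    changed_by: str
    source: str
    recorded_at: str


class VersionedRecord:
    """Append-only history for one school record (hypothetical model)."""

    def __init__(self):
        self._versions = []

    def commit(self, data, changed_by, source):
        """Store a new version; earlier versions are never modified."""
        version = Version(
            number=len(self._versions) + 1,
            data=dict(data),
            changed_by=changed_by,
            source=source,
            recorded_at=datetime.now(timezone.utc).isoformat(),
        )
        self._versions.append(version)
        return version

    @property
    def current(self):
        return self._versions[-1]

    def history(self, field_name):
        """List (version, value, changed_by, source) each time a field's value changed."""
        changes, previous = [], object()  # sentinel never equals a real value
        for v in self._versions:
            value = v.data.get(field_name)
            if value != previous:
                changes.append((v.number, value, v.changed_by, v.source))
            previous = value
        return changes

    def rollback(self, number, changed_by):
        """Roll back by committing an old state as a new version, preserving history."""
        target = self._versions[number - 1]
        return self.commit(target.data, changed_by, source=f"rollback to v{number}")


record = VersionedRecord()
record.commit({"connectivity": "none", "lat": 0.3}, "admin_a", "ministry_2023")
record.commit({"connectivity": "4G", "lat": 0.3}, "admin_b", "operator_feed")
print(record.history("connectivity"))
```

Modelling a rollback as a new version rather than a deletion means the history also shows that a rollback happened and who performed it.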

Beyond infrastructure: People and systems working together

Technology alone does not create a consolidated view – people do. The systems we have built enable collaboration. When governments share data, reports become a conversation starter. When connectivity data conflicts with school master data, the approval process forces careful review.

This foundation helps everyone at Giga speak the same language: the people and the products, including Giga Maps and Giga Meter, all draw from the same source.

Research teams use the same validated locations that inform connectivity planning. A number quoted in a presentation matches what appears on Giga Maps and Giga Meter.

The same principles that solved internal challenges have positioned Giga to become a trusted compiler and curator of government data on school location and connectivity. With transparent governance, documented lineage, and consistent quality, anyone seeking comprehensive, reliable information about schools’ connectivity worldwide can turn to Giga as a trusted aggregator of government data.

What’s next

Future posts will explore the telecom infrastructure-modeling process. This involves gathering data on the distance of schools from existing infrastructure, such as fiber, cellular, and microwave networks, along with contextual data including population density and labor costs. Taken together, these inputs enable each country to identify the optimal connectivity solutions for its schools.

While schools remain Giga’s primary focus for now, the architecture extends to other critical infrastructure. Health centers, community spaces, emergency response facilities: any entity requiring similar data operationalization can flow through bronze, silver, and gold layers.

Operationalizing messy, multi-source data is not a solved problem; it is an ongoing discipline. Processes improve continuously. New data sources emerge. Quality checks evolve as we encounter edge cases. But the principle remains constant: data, systems, and people working together to build the trusted foundation that enables informed decision-making.

Because when you are helping connect 2.2 million schools to the internet, trust in your data is not optional – it is foundational.

About the Author

Rishabh (Sharky) Raj specializes in system design and data architecture that transforms how organizations operationalize data, while also mentoring peers across the tech community. Rishabh works out of the Giga Technology Centre in Barcelona.

For more on how Giga validates school locations using geospatial tools and AI, read our blog: Improving School Location Accuracy for Connectivity: Giga’s Validation Approach